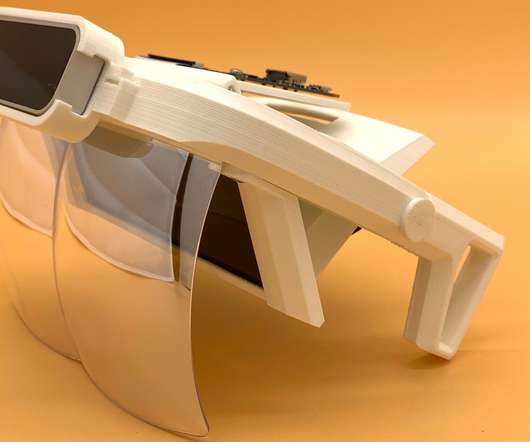

Leap Motion ‘Virtual Wearable’ AR Prototype is a Potent Glimpse at the Future of Your Smartphone

Road to VR

MARCH 23, 2018

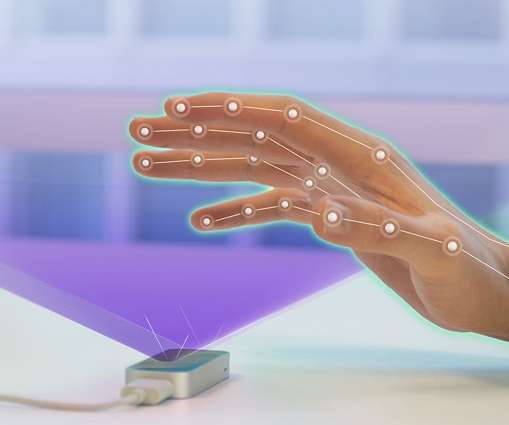

Leap Motion, a maker of hand-tracking software and hardware, has been experimenting with exactly that, and is teasing some very interesting results. Leap Motion has shown lots of cool stuff that can be done with their hand-tracking technology, but most of it is seen through the lens of VR.
